Ramblings about video game AI - Part 2 - Dissecting the brain

Braaains!

Welcome to the second part of our ongoing series about the AI systems in Project Slide. This time we’ll take a closer look at the core of any AI agent, the brain. The brain doesn’t step on the gas pedal of the vehicle, nor turn the steering wheel, nor fire any guns. It delegates all of those things to other subsystems. However, those other subsystems won’t do anything without receiving orders from the brain.

From a technical perspective, the brain is a C++ class attached to the vehicle object. The game engine uses a component model which closely resembles the “classic-style” Unity component model. The brain is one such component, and it is to be considered the “entry point” to the AI of a vehicle. When a level loads, all vehicles that are supposed to act as AI agents activate their brains. This, in turn, activates the rest of the AI systems for that vehicle. Deactivating the brain reverts the vehicle to “just a vehicle” (which a player can drive, for example).

The brain can independently make decisions and take actions, but it can also communicate with other agents’ brains through messages. As we already covered in the previous part, the brain can be scripted to carry out very specific tasks such as “go to position”, or to activate more complex behaviors such as “patrol this area”. While such a behavior is active the brain runs its decision making logic, deciding how to best carry out the task at hand. It might also switch from one behavior to another. For example, while in combat, if the vehicle suffers enough damage the brain might switch to a fleeing behavior in order to escape its enemies.

The brain is split into two main parts, Decision making and Behaviors:

Decision making

This is the part of the brain that decides what to do next, on a high level. For example, in a combat encounter the decision making system can monitor the health of the vehicle and decide to switch from a combat behavior to a fleeing behavior when the odds are stacked against the agent. This part is modeled as a finite-state machine.

Behaviors

These are essentially recipes for carrying out a specific task. In the patrolling example, the behavior would tell the agent to look for the next waypoint on the patrol route, drive towards it, switch to the next waypoint, etc. The behaviors are modeled as behavior trees.

In addition, there are a few systems that aren’t exactly part of the brain, but are instead used by the brain:

Perception

What the AI can “see” or “hear”. For example, when an AI agent is patrolling an area the perception system is responsible for reporting to other parts of the brain when an enemy is near.

Movement

This system is responsible for maneuvering the vehicle around in a way that makes sense for the current situation. Compared to moving around an AI character, driving a physics-based vehicle is a fair bit more complex and we will spend a lot of this blog series delving into this part.

Navigation

The vehicle doesn’t just need to know how to drive, it needs to know where to drive. The navigation system provides the vehicle with a path, called a driveline, to drive along to reach its destination. This involves things like obstacle avoidance.

Weapon control systems

These systems allow the AI brain to aim at a target, fire weapons, reload etc. Things go boom!

Messaging

Whenever an AI agent wants to talk to another AI agent it sends a message. For example, if an enemy agent spots the player it might tell nearby allies to attack the player.

We’ll talk about the decision making and behaviors a bit more, and we’ll look at the other parts in later blog posts. First though, let’s talk a bit about how I ended up with this architecture.


All the buzzwords!

Before writing anything I did a little bit of research on how to design the structure of the AI system. I wanted something reasonably simple so I could get started quickly, but flexible enough to support the behaviors required by the game. I knew I would probably use Finite-state machines (FSMs) in some capacity, but what else do people use?

When googling “game AI architecture” you come across interesting topics such as Goal-Oriented Action Planning (GOAP). In this model, agents are given goals and, using a set of actions available to them, figure out a plan for reaching those goals. This AI model was popularized by the game F.E.A.R. It is, in some ways, a very elegant solution, enabling agents to come up with new and unexpected ways to reach their goals. However, it didn’t quite feel like the right approach for Slide, mostly because of the amount of up-front work you need to do to get anything working. If you are interested, the YouTube channel AI and Games made a video about GOAP fairly recently.

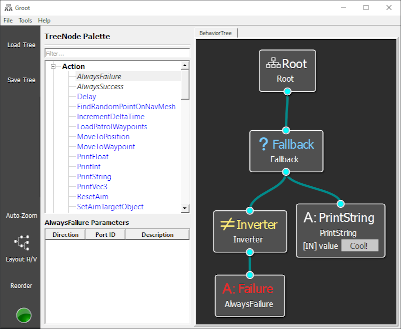

Behavior Trees (BTs) were the next technique I looked into. I had a vague idea of what a BT was about, having worked at companies using them for building AI, but I had never done any BT work myself. You won’t get very far into researching game AI these days without running into BTs, seeing as Unreal uses them. Unfortunately, Unreal’s seemingly excellent BT editor won’t help me and my humble custom engine, so I started looking for another editor to use when practicing working with BTs. After a bit of searching I found Groot, an open-source BT editor on GitHub (image on the left). Jackpot!

The next step was to start designing AI behaviors and logic as BTs. Groot uses an XML-based format for its documents and you can create new node types by putting them in a “palette” file which you load either on its own or as part of your BT. This allowed me to start designing nodes without having to write the underlying C++ code. To actually execute BT nodes in the game they need to be implemented in the game’s programming language, in this case C++.

I am not going to cover how BTs work in detail as there are excellent articles on the web about that. In short, they are good for describing a series of steps to carry out in order to get a task done. They are highly reactive and, when designed correctly, can be much more readable than Finite-state machines (FSMs). No matter how I tried though, I could not wrap my head around how to use them for decision making.

Say you have an AI agent with four states: idle, patrol, combat and fleeing. The agent can go from patrolling to combat, to fleeing, back to patrolling, to combat again etc. To model this using a BT you would need to consider each state and a set of requirements for entering that state. On each update, you would check the requirements and decide whether to enter the state (or stay in it), then check the next state and so on. This would lead to horrible spaghetti trees before long. Also, trying to move from a specific state to another specific state in a specific situation gets really tricky, because the states would be fighting each other depending on where they were in the tree. These were things I knew perfectly well how to do in an FSM. After struggling for a while I decided I didn’t like BTs and moved on.

Back to basics

At this point I was a bit fed up with the situation and decided to start working on the simplest possible solution, to get a bit of development momentum. I decided to focus on the movement and started working on that part (which we will cover in later chapters). For the AI vehicle to move anywhere, the brain simply needs to tell the movement component where to go (and a few other things which aren’t important right now). In other words, the brain logic is very simple for rudimentary movement.

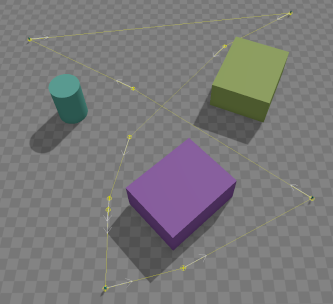

The image on the right shows a very simple patrol route in the level editor, authored by placing four waypoints (the small turquoise things in the “corners” of the path). The white arrows show the direction of the patrol route. Let’s break up the patrolling behavior into a set of actions to take:

1. Load the waypoints of the patrol route into memory.

2. Look up the waypoint closest to the vehicle (skipping to the next one if we are “past” the closest waypoint).

3. Move to the waypoint.

4. Look up the next waypoint on the route.

5. Move to the waypoint.

6. Go to step 4.
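The two lookups in the steps above can be sketched roughly as follows. This is only an illustration, not the game’s code: `Vec2`, `closestWaypoint` and `nextWaypoint` are hypothetical names, the route is assumed to be a closed loop, and the “skip ahead if we are already past it” refinement is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical 2D position type; a real engine will have its own vector class.
struct Vec2 { float x; float y; };

inline float distanceSquared(Vec2 a, Vec2 b) {
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    return dx * dx + dy * dy;
}

// Index of the waypoint closest to the vehicle.
// The "are we already past it?" check from the text is omitted here.
inline std::size_t closestWaypoint(const std::vector<Vec2>& route, Vec2 vehicle) {
    assert(!route.empty());
    std::size_t best = 0;
    for (std::size_t i = 1; i < route.size(); ++i) {
        if (distanceSquared(route[i], vehicle) < distanceSquared(route[best], vehicle)) {
            best = i;
        }
    }
    return best;
}

// Assumes the patrol route is a loop: wrap around after the last waypoint.
inline std::size_t nextWaypoint(const std::vector<Vec2>& route, std::size_t current) {
    assert(!route.empty());
    return (current + 1) % route.size();
}
```

The “move to the waypoint” steps are where the movement system takes over, so they are deliberately not part of this sketch.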

“Okay, that’s not that bad. I bet I can write that in C++ very quickly.” And that I did. I also implemented ‘Go to position’, ‘Follow game object’ and a few others with relative ease. I implemented a system with tasks, where the AI agent has a queue of tasks to carry out. When a task is complete it starts with the next one etc. That way you can tell the agent to “go here, then follow that vehicle” and so on. The patrolling logic above was packaged into a patrolling task.
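A task queue like the one described can be sketched in a few lines. The `Task` and `TaskQueue` types below are hypothetical and only assume what the text says: tasks run one at a time, and the next one starts when the current one reports completion.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <string>
#include <utility>

// Hypothetical task: a name plus an update function that returns true once done.
struct Task {
    std::string name;
    std::function<bool()> update;
};

class TaskQueue {
public:
    void push(Task task) { tasks_.push_back(std::move(task)); }

    // Updates the front task once and pops it when it reports completion.
    void update() {
        if (tasks_.empty()) return;
        if (tasks_.front().update()) tasks_.pop_front();
    }

    bool empty() const { return tasks_.empty(); }

    const std::string& currentName() const {
        assert(!tasks_.empty());
        return tasks_.front().name;
    }

private:
    std::deque<Task> tasks_;
};
```

Queuing “go here, then follow that vehicle” would then amount to two `push` calls followed by one `update` call per frame.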

And to be honest, the system worked quite well. It was simple, performant, and everything so far had kinda worked. However, I was already seeing the emerging spaghettiness of the code in some of the tasks, and I found myself re-implementing certain parts of the code for each task, while still finding it difficult to split the shared code out into a base class or separate modules. Also, I had a nagging suspicion that the task queue approach wouldn’t scale well to complex multi-agent combat behaviors and such, and that I would have to constantly throw away the queued-up tasks and reschedule everything, which defeats the point of the queue in the first place.

Finding the middle ground

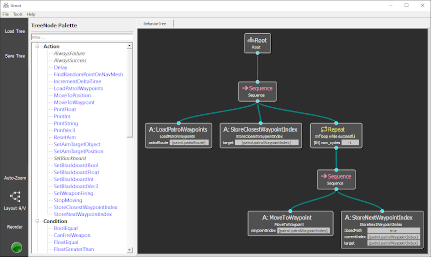

I was halfway through implementing the first combat task when I realized that the logic I was putting into the tasks was exactly the kind of thing behavior trees are good at, i.e. a series of steps required to carry out a task. If I ditched the idea of one monolithic BT for the entire brain and went with smaller per-task BTs instead, everything would be simpler. I quickly sketched up the patrolling behavior as a BT (see image on the left).

Compare the patrolling BT with the list of steps above. They are almost exactly the same. However, with BTs I get to design the tasks visually and over time I will build up a library of reusable BT nodes which can be used when designing new tasks. With this, I scrapped the task system and replaced the task code with a bunch of BT nodes instead. I also stopped using the “task” term in favor of “behaviors”, but they are essentially the same.
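To make the “series of steps” idea concrete, here is a minimal, generic sequence node. It is not BehaviorTree.CPP’s implementation or API; `Status`, `Leaf` and `Sequence` are hypothetical names, and the node simply remembers which child it was running between ticks.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

enum class Status { Success, Failure, Running };

// A leaf is any callable that reports a status when ticked.
using Leaf = std::function<Status()>;

class Sequence {
public:
    explicit Sequence(std::vector<Leaf> children) : children_(std::move(children)) {}

    // Ticks children in order. Stops at the first child that is still
    // running or has failed; succeeds only when every child has succeeded.
    Status tick() {
        while (current_ < children_.size()) {
            const Status s = children_[current_]();
            if (s == Status::Running) return Status::Running;  // resume here next tick
            if (s == Status::Failure) {
                current_ = 0;
                return Status::Failure;
            }
            ++current_;  // child succeeded, move on to the next step
        }
        current_ = 0;  // all steps done; start over on the next tick
        return Status::Success;
    }

private:
    std::vector<Leaf> children_;
    std::size_t current_ = 0;
};
```

A patrol leg then becomes a sequence of two leaves, “look up the next waypoint” and “move to the waypoint”, with the second one returning `Running` until the vehicle arrives.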

While trying to find out more about how to use BTs effectively I found an article by Bobby Anguelov in which he makes many of the same points as me. Obviously, Bobby is an accomplished AI programmer which means that he knows what he is talking about, while I am just flailing around trying not to do anything stupid. Also, Bobby published his article before I even properly started working on the AI, so all credits to him. It is nice to have a plan which is backed up by someone else though!

The AI architecture now looks something like the image on the left. As stated before, the decision making system and the behaviors can be considered part of the brain. The brain receives input through script commands, messages from other AI agents’ brains, as well as by perceiving its environment, i.e. “sensing” other objects, or lack thereof. The decision making system then processes the data and activates a behavior, which uses all or some of the systems available to it, such as movement, navigation etc. Note that even simple commands such as “move to position” now activate a BT rather than communicating directly with the movement systems like the old task queue-based system did.

All right, so we have concluded that BTs aren’t a good fit for the decision making system. What should we use then? Well, it depends. Currently, the most complicated brain is for the scout vehicle, and it uses the FSM framework provided by the game engine to set up a few states, namely Idle, Patrolling, Combat, Fleeing and DrivingToTarget. It then defines transitions between those states and triggers those transitions as appropriate. In other words, it is currently hard-coded C++. There are no requirements for the brain other than that it needs to be a scriptable game object component, which means that I could technically implement another vehicle’s brain using a different system. We’ll see, maybe I’ll experiment some more. The behaviors don’t know what kind of brain is executing them, which helps with code reuse.
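As a rough illustration of that kind of hard-coded state machine, here is a minimal sketch. It does not use the engine’s FSM framework (which isn’t shown in this post); `BrainFsm`, `allow` and `trigger` are hypothetical names, and which transitions actually exist in the scout brain is an assumption left to the caller.

```cpp
#include <cassert>
#include <set>
#include <utility>

// The state names are the ones listed in the text.
enum class BrainState { Idle, Patrolling, Combat, Fleeing, DrivingToTarget };

class BrainFsm {
public:
    // Registers a legal transition from one state to another.
    void allow(BrainState from, BrainState to) { allowed_.emplace(from, to); }

    // Switches state and returns true if the transition was registered;
    // otherwise leaves the state untouched and returns false.
    bool trigger(BrainState to) {
        if (allowed_.count(std::make_pair(state_, to)) == 0) return false;
        state_ = to;
        return true;
    }

    BrainState state() const { return state_; }

private:
    BrainState state_ = BrainState::Idle;
    std::set<std::pair<BrainState, BrainState>> allowed_;
};
```

In a setup like this, each state change would also be the point where the brain swaps the active behavior tree, for example starting the fleeing behavior when entering `Fleeing`.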

Oh, Behave!

So far, we have discussed what behavior trees are good for and how we are using the Groot editor to author them. What about actually executing them at runtime? As it happens, Groot has a sister project called BehaviorTree.CPP. It is an open-source C++ runtime library for executing BTs. It is made by the same people as the Groot editor, and it can directly read the BT files created with Groot. The library is targeted at robotics rather than games and there are certain things I don’t agree with, such as not being able to override the memory allocators used by the library or the use of exceptions, but overall I have been quite happy with it. The library has allowed me to start using BTs quickly and the community has been friendly and helpful.

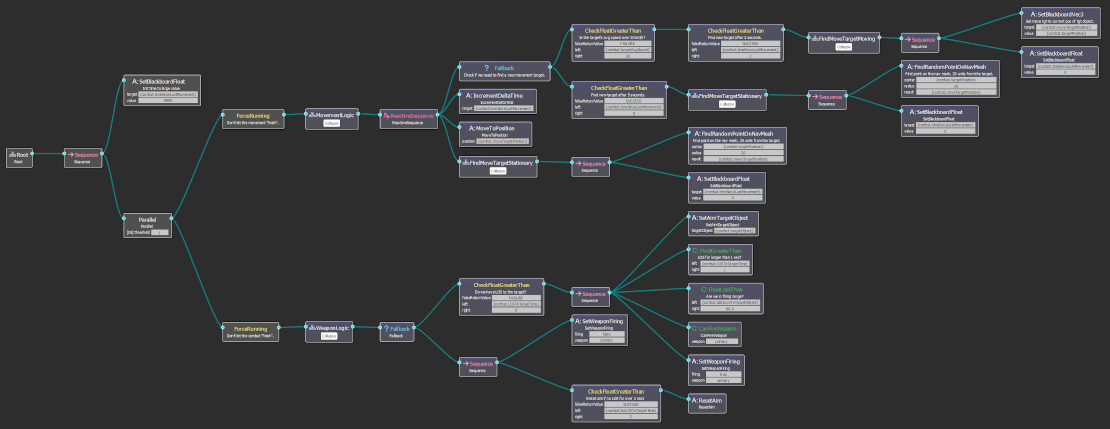

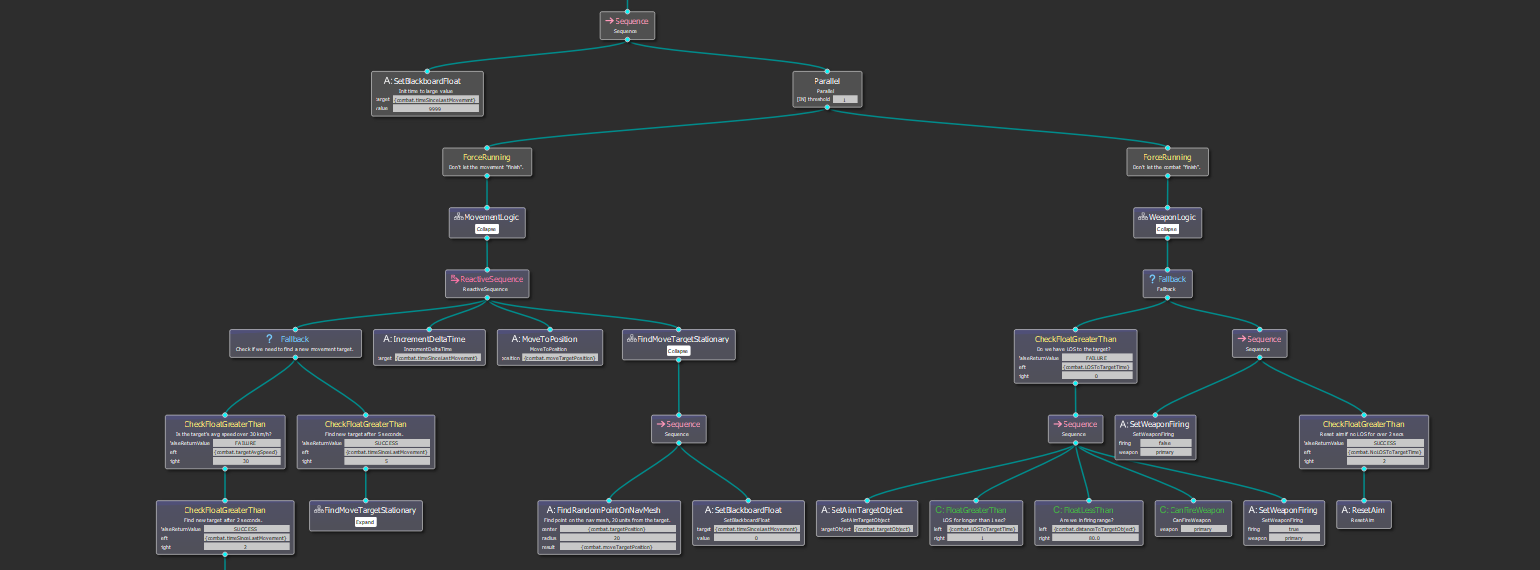

One defining feature of BTs is code reuse, not just between different trees, but also within the same tree. The image above shows the current version of the scout AI combat behavior. Click the image to open the full version. You’ll see that some nodes have a “collapse” button. These are subtrees and they can be used in multiple places within the same tree. This allows you to define small pieces of behavior and reuse them over and over without having to touch every place they are used when making changes. It’s great. It also allows you to “hide” away parts of the tree you are currently not interested in, e.g. when you are working on another branch. Groot allows you to create subtrees of existing branches with the click of a button and merge that tree back into the parent tree later.

The combat behavior above is already a bit more complex, so let’s break it down and see what actually happens. The attacking vehicle tracks how fast the target object (e.g. a vehicle) moves. If it moves faster than 30 km/h the attacker will try to chase the target by updating its target position every two seconds. If the target moves slower than 30 km/h (or is completely stationary) the attacker will look for a random point close to the target every five seconds and move towards it. This essentially means it will keep moving at all times, trying to stay close to the target.

While this is happening the attacker will try to fire at the target. It starts by checking if it has a line-of-sight (LOS) to the target object. If it does, it will aim at the target, and when it has maintained a LOS for over a second it will start firing its primary turret, provided it is within range (currently set to 80 meters). If it doesn’t have a LOS to the target it will keep aiming at it for two seconds. If it still doesn’t have a LOS it will reset its aim, moving the turret back to the rest position. This allows the attacker to keep its weapon roughly aimed at the target even if another vehicle or obstacle passes between the two for a brief moment, but it won’t magically be able to keep aiming at the target if they can’t see each other for a longer time.
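Stripped of the tree structure, the rules above boil down to a couple of small decisions. The sketch below expresses them as plain functions using the numbers quoted in the text; the function and enum names are hypothetical, and a target moving at exactly 30 km/h is assumed to count as “slow”.

```cpp
#include <cassert>

// Tuning values quoted in the text.
constexpr float kChaseSpeedKmh = 30.0f;    // above this the attacker chases
constexpr float kChaseRetargetSec = 2.0f;  // chasing: refresh the target position
constexpr float kRoamRetargetSec = 5.0f;   // slow target: pick a new nearby point
constexpr float kLosBeforeFireSec = 1.0f;  // LOS must be held longer than this to fire
constexpr float kAimWithoutLosSec = 2.0f;  // keep aiming this long after losing LOS
constexpr float kPrimaryRangeM = 80.0f;    // primary turret range

enum class MoveMode { Chase, RoamNearTarget };
enum class TurretAction { Rest, Aim, Fire };

inline MoveMode chooseMoveMode(float targetSpeedKmh) {
    return targetSpeedKmh > kChaseSpeedKmh ? MoveMode::Chase : MoveMode::RoamNearTarget;
}

// How often the move target is refreshed in each mode.
inline float retargetInterval(MoveMode mode) {
    return mode == MoveMode::Chase ? kChaseRetargetSec : kRoamRetargetSec;
}

// timeWithLos / timeWithoutLos: seconds since LOS was gained / lost.
inline TurretAction chooseTurretAction(bool hasLos, float timeWithLos,
                                       float timeWithoutLos, float distanceM) {
    if (hasLos) {
        const bool ready = timeWithLos > kLosBeforeFireSec && distanceM <= kPrimaryRangeM;
        return ready ? TurretAction::Fire : TurretAction::Aim;
    }
    // LOS lost: hold the aim briefly, then return the turret to rest.
    return timeWithoutLos < kAimWithoutLosSec ? TurretAction::Aim : TurretAction::Rest;
}
```

In the actual behavior these checks are spread across BT nodes, which is what makes them tweakable in the editor; the functions are only a compact way to read the logic.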

Now let’s see what this looks like in action. Obviously there are lots of things missing, such as weapon reloading, but we can already see a basic form of combat happening. The faint green line shows the direction of the bullets. We don’t have proper bullet trails yet. Since the hauler is not moving anywhere in the video we don’t see the chasing behavior.

Earlier I said that I won’t go into how BTs work, but I’d like to talk a little bit about how data flows between the brain code and the behavior (tree). You might have wondered about those strings enclosed in curly brackets, e.g. {combat.timeSinceLastMovement}. Those are called Blackboard Entries and they are essentially variables in a key-value store which is accessible by both the BT nodes and the code using the BT. When instantiating a BT using BehaviorTree.CPP, the library creates an instance of a blackboard for the BT to use. Nodes can then read values from and write values to the blackboard as needed. If you want, you can give the BT an already existing blackboard to use, rather than creating a new one. This way, trees and/or subtrees can communicate with each other through the shared blackboard.
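Conceptually, a blackboard is little more than a typed key-value store. The sketch below is a hypothetical stand-in written for illustration (it needs C++17 for `std::any` and `std::optional`); it is not BehaviorTree.CPP’s blackboard class and does not mirror its API.

```cpp
#include <any>
#include <cassert>
#include <optional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical minimal blackboard: string keys mapped to values of any type.
class Blackboard {
public:
    template <typename T>
    void set(const std::string& key, T value) {
        entries_[key] = std::move(value);
    }

    // Returns std::nullopt if the key is missing or holds a different type.
    template <typename T>
    std::optional<T> get(const std::string& key) const {
        const auto it = entries_.find(key);
        if (it == entries_.end()) return std::nullopt;
        if (const T* value = std::any_cast<T>(&it->second)) return *value;
        return std::nullopt;
    }

private:
    std::unordered_map<std::string, std::any> entries_;
};
```

The brain would write an entry such as `combat.timeSinceLastMovement` with `set`, and a node would read it back with `get<float>`. Sharing one instance between several trees is what lets them communicate.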

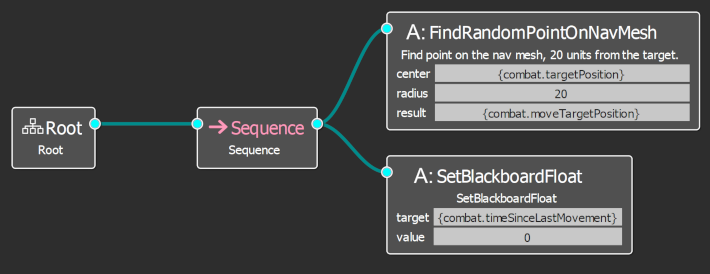

The image on the right shows the subtree called “FindMoveTargetStationary”. If you look carefully you can find it embedded into the full combat BT above (in multiple places!). It searches for a random position within 20 world units of the combat target (e.g. the enemy vehicle the AI is trying to destroy) and writes that position to the blackboard. It does this by reading a blackboard value called “combat.targetPosition”, which is written by the brain, finding a random point within 20 world units of the target, and writing the result to another blackboard value called “combat.moveTargetPosition”. Finally, it sets a third blackboard value called “combat.timeSinceLastMovement” to zero.
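The data flow of that subtree can be sketched as a single function. Everything here is an assumption made for illustration: the `CombatBlackboard` struct stands in for the three blackboard entries, the positions are 2D, and the random point is sampled uniformly in a disc rather than on the navmesh as the real FindRandomPointOnNavMesh node presumably does.

```cpp
#include <cassert>
#include <cmath>
#include <random>

struct Vec2 { float x; float y; };

// Hypothetical stand-in for the combat.* blackboard entries named in the text.
struct CombatBlackboard {
    Vec2 targetPosition{0.0f, 0.0f};      // combat.targetPosition (written by the brain)
    Vec2 moveTargetPosition{0.0f, 0.0f};  // combat.moveTargetPosition
    float timeSinceLastMovement = 0.0f;   // combat.timeSinceLastMovement
};

// Uniformly samples a point in a disc around `center`.
// Unlike a navmesh query, this does not check that the point is drivable.
template <typename Rng>
Vec2 randomPointInDisc(Vec2 center, float radius, Rng& rng) {
    std::uniform_real_distribution<float> unit(0.0f, 1.0f);
    const float angle = 6.2831853f * unit(rng);
    const float r = radius * std::sqrt(unit(rng));  // sqrt keeps the density uniform
    return Vec2{center.x + r * std::cos(angle), center.y + r * std::sin(angle)};
}

// Read the target position, write a nearby move target, reset the timer.
template <typename Rng>
void findMoveTargetStationary(CombatBlackboard& bb, Rng& rng, float radius = 20.0f) {
    bb.moveTargetPosition = randomPointInDisc(bb.targetPosition, radius, rng);
    bb.timeSinceLastMovement = 0.0f;
}
```

Passing the random number generator in, rather than creating one inside the function, keeps the sketch deterministic under a fixed seed, which is handy when debugging AI behavior.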

Using the blackboard you can design your nodes without having to hardcode the sources and destinations of the values they read or write into the node logic. For example, I might want to use the FindRandomPointOnNavMesh node in another behavior tree. It could be used to implement a behavior which makes a vehicle follow another one. If the target vehicle came to a halt you wouldn’t want the follower to run head-on into the target vehicle, but rather select a point nearby to stop at. FindRandomPointOnNavMesh with a suitable radius could be used for that.

Some people seem to like the idea of using blackboards for information sharing between AI agents. For example, an agent might write “The player was last seen at position X” to the blackboard and another agent might read the information from the blackboard. They, in turn, might respond with a message of their own. I don’t currently use blackboards for this. The game engine Slide is using has a very efficient message passing system which is the preferred way for game objects to communicate anyway, so I am using that instead. This way, parts of the codebase that have no idea what a blackboard is, engine code for example, can talk to the AI agents.

Friend or foe?

The last thing we will cover today is how the agent knows if an object is to be considered friendly, hostile or neutral. This is particularly important in the perception system. If an AI agent is patrolling an area and another vehicle crosses its path, the agent needs to know how to react. Every object that can be considered friendly or hostile has a Faction ID which can be queried at runtime, or even changed. Additionally, there is a table of information regarding which factions are friendly, neutral or hostile towards one another. At the time of writing all of this is dynamic information, i.e. an object can change factions during the game and factions can form alliances and start wars.

It is quite unlikely that faction alignments will change in the final game, which means that the information can be hardcoded and/or inlined, but it is fun to have the option for now. On the other hand, individual objects’ faction IDs will most likely be mutable in the final product. For example, a vehicle out in the world should probably be considered neutral towards everyone as long as no-one is driving it. As soon as the player enters the vehicle (changing vehicles is a part of the core gameplay) it should switch to the player faction so that enemies will react accordingly.
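A faction lookup along these lines could be sketched as below. The faction names, the symmetric relations, the “same faction means friendly” rule and the neutral default are all assumptions made for the example; the post doesn’t specify how the real table is laid out.

```cpp
#include <cassert>
#include <map>
#include <utility>

// Hypothetical factions; the actual list isn't given in the text.
enum class Faction { Player, Raiders, Unowned };
enum class Attitude { Friendly, Neutral, Hostile };

class FactionTable {
public:
    // Relations are stored symmetrically, so (a, b) and (b, a) share an entry.
    void set(Faction a, Faction b, Attitude attitude) {
        relations_[key(a, b)] = attitude;
    }

    // Same faction is friendly; pairs without an entry default to neutral.
    Attitude between(Faction a, Faction b) const {
        if (a == b) return Attitude::Friendly;
        const auto it = relations_.find(key(a, b));
        return it == relations_.end() ? Attitude::Neutral : it->second;
    }

private:
    static std::pair<Faction, Faction> key(Faction a, Faction b) {
        return a < b ? std::make_pair(a, b) : std::make_pair(b, a);
    }

    std::map<std::pair<Faction, Faction>, Attitude> relations_;
};

// An object's faction ID is mutable, e.g. it changes when the player enters.
struct Vehicle {
    Faction faction = Faction::Unowned;
};
```

With this, an empty vehicle reads as neutral to a patrolling enemy, and setting `faction = Faction::Player` when the player gets in is enough to make the same lookup return hostile.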

Until next time…

Whoa, that got long. I hope this has been interesting or at least mildly entertaining. In the next part we will look at navigation. See you then!